Semantic Communications in AI Systems

In this research, we develop what we call post-Shannon-era communication, in which communication is made more efficient by going beyond the transmission of bits (i.e., Shannon-era communication) and treating it as conveying an agent's (i.e., a wireless network node's) beliefs, goals, and intents via grounded interactions; that is, semantic communication for reasoning and decision making. Semantic communication will be an integral part of 6G wireless networks, which are expected to transform the evolution of wireless from ‘connected things’ to ‘connected intelligence’, where AI-empowered wireless networks enable interconnections among humans, things, and intelligence within a deeply intertwined and hyper-connected cyber-physical world. A hyper-connected digital system requires extremely low latency, minimal power consumption, and prudent use of bandwidth. Although recent developments in 5G systems are impressive first steps in the right direction, their latency and power characteristics still do not suffice to carry the workload of future distributed systems. Our research has focused on a new framework, namely semantic communications, that takes into account the meaning and usefulness of transmitted messages in an AI system. In particular, we have been investigating communication schemes such as joint source-channel coding and functional compression that use neural networks to reduce communication overhead and transmission power by tailoring the communication problem to the specific machine learning (ML) task at hand. These frameworks generate intermediate representations that contain mostly information that is relevant and useful for the target ML task. However, these schemes lack a connection to rational decision making in AI systems.
Our next-generation semantic communication framework will further advance our current work by developing information theory and communication schemes tailored to rational decision-making agents in AI systems. At the core of this semantic communication is a neuro-symbolic logical reasoning engine, which our team has been developing as a differentiable inductive/abductive logic reasoning framework since 2016.

Semantic Communications for Rational Decision Making in AI Systems

As wireless evolves from ‘connected things’ to ‘collaborative intelligence’, where AI-empowered wireless networks enable interconnections among humans, objects, and intelligence in a deeply intertwined and hyper-connected cyber-physical world, we expect semantic communications to play a pivotal role. A hyper-connected digital system requires extremely low latency, minimal power consumption, and prudent use of bandwidth. Although recent developments in 5G systems are impressive first steps in the right direction, their latency and power characteristics still do not suffice to carry the workload of massive distributed systems. Furthermore, with ever-increasing demand, internet bandwidth, once considered a cheap commodity, has started to become a very expensive utility. At this point, it is reasonable to say that classical Shannon-era (Level A) communication has fulfilled its purpose and that physical limits have become the bottleneck to further speed improvements. Hence, a new framework, namely semantic (Level B) communications, that takes the meaning and usefulness of transmitted messages into account is necessary. The principle behind existing work in semantic communication is to design encoders that generate intermediate representations containing mostly information that is relevant and useful for the target machine learning task. However, these schemes lack a connection to rational decision making, and hence do not permit a proper theoretical characterization of what kind of, and how much, information a rational agent needs during the decision-making process. Our research focuses on introducing the theoretical foundation as well as the communication schemes that take these issues into account.

NSF-MLWIN Projects

Functional Compression and Collaborative Inference over Wireless Channels

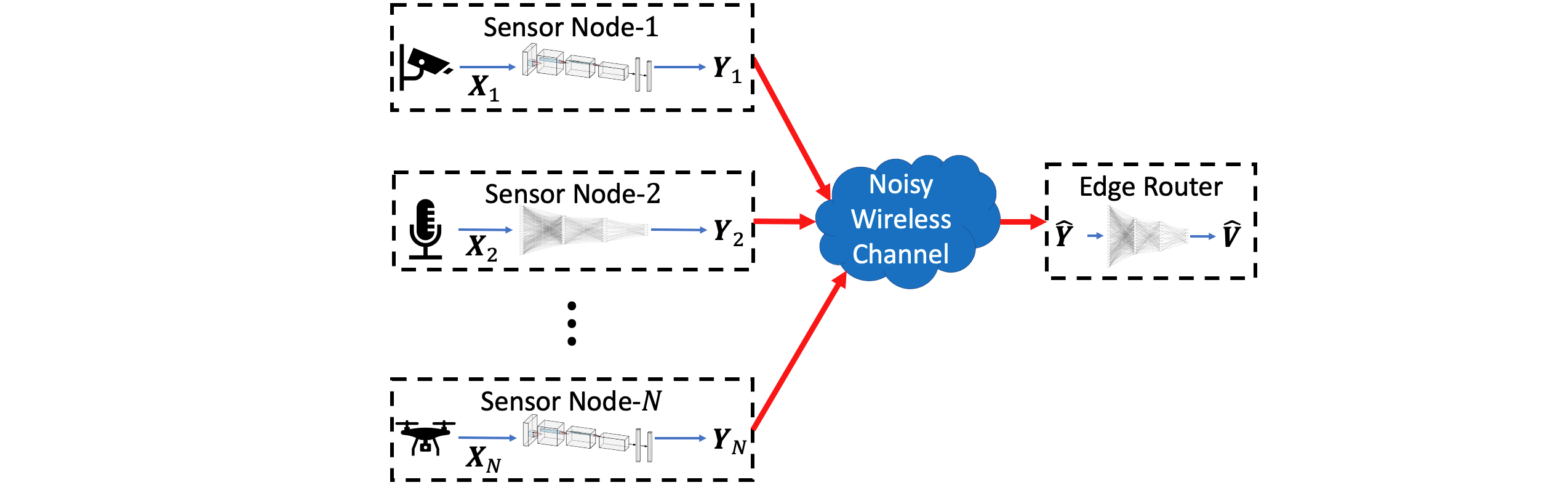

We investigate distributed deep model training over a wireless edge network for distributed inference. Specifically, we assume local nodes that observe possibly correlated signals. A central receiver, referred to as the central server, is interested in computing a function of those local node observations. The local nodes have to encode their observations independently and transmit them to the central server over a wireless channel. We call this collaborative inference since the final function value depends on all the local nodes’ observations. We are particularly interested in functions that do not have simple closed forms, for example, classification or object detection. We leverage the Kolmogorov-Arnold representation theorem to establish that a model trained in a distributed manner can perform as well as a centrally trained model, for which all observed network data are transferred to a single node for training. Clearly, transferring the compressed sensor data to the edge router is not a viable option due to latency, channel use, and privacy constraints. Therefore, the fundamental question is how we can collaboratively train the local encoders and the global decoder at the wireless edge router using neural networks without explicitly communicating the massive training data that is split among several nodes in a wireless edge network. As such, the wireless edge network, acting as a machine-learning engine, will use the training data from each edge node to train and form a distributed deep model that can later be used for collaborative inference by the entire network.
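
For reference, the Kolmogorov-Arnold representation theorem states that any continuous function f on the unit cube [0,1]^n can be written as a finite superposition of continuous univariate functions. The symbols Φ_q and φ_{q,p} below are the standard notation for the theorem, not notation taken from this project. Reading the inner functions as local encoders and the outer functions as a server-side decoder is only an informal way to see why independent encoding followed by aggregation need not lose expressive power.

```latex
f(x_1, \dots, x_n) \;=\; \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)
```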

We study three different training objectives for training the encoders and the decoder of the above setup for communication-efficient inference. We theoretically compare the methods with the Indirect Rate-Distortion problem, where the nodes are also assumed to communicate with each other. We show that the Variational Information Bottleneck based training scheme performs best among the three. In a nutshell, the framework is designed on the principle that we encode the local node data so as to transmit only the minimal useful information that the edge router needs to compute the value V = f(·) reliably, hence the name functional compression. Note that in traditional approaches that deploy source-channel compression techniques, the local nodes’ data must be recovered at the edge router. This requirement unnecessarily burdens the wireless network. In functional compression, by contrast, we do not need to recover the local data, yet we are still able to compute the value V that the objective task requires.
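
A minimal sketch of a Variational Information Bottleneck style loss, assuming Gaussian local encoders with a standard normal prior and a classification task at the server. The function names, the weight `beta`, and the per-node `(mu, log_var)` interface are illustrative assumptions, not the project's implementation.

```python
import numpy as np

def gaussian_kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), one value per sample."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=1)

def cross_entropy(logits, labels):
    """Mean negative log-likelihood of integer labels under softmax(logits)."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

def vib_loss(logits, labels, node_posteriors, beta=1e-2):
    """Task loss at the server plus beta times the summed rate of all nodes.

    node_posteriors is a list with one (mu, log_var) pair per local node;
    each array has shape (batch, latent_dim). This interface is hypothetical.
    """
    rate = sum(gaussian_kl_to_standard_normal(mu, log_var).mean()
               for mu, log_var in node_posteriors)
    return cross_entropy(logits, labels) + beta * rate
```

The KL term penalizes how much each node's transmitted representation deviates from the prior, which is what discourages sending information the server does not need for computing V.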

Privacy Aware Functional Compression over Wireless Channels

In this project, we investigate privacy constraints in the distributed functional compression problem, where the local nodes seek to hide private attributes that are correlated with the function value to be computed at a global server. Again, the goal is to send minimal information from the local nodes to the server so that the server can compute a function that depends on data from all local nodes while still respecting the privacy of those nodes. We first study the problem with a single node and a single receiver (the global server). We employ the principles of the Generalized Information Bottleneck for privacy-aware functional compression. Here, we impose the privacy constraint as a mutual information constraint between the signal received at the global server and the local private attribute(s) chosen for hiding. We propose a novel upper bound for this mutual information and apply it along with an adversarial bound to achieve a state-of-the-art privacy-utility trade-off. We then return to the distributed functional compression problem and devise a mechanism to decompose the bounds to enable communication-free computation at the local nodes. Using this, we show a state-of-the-art privacy-utility trade-off in the distributed scenario.
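
To make the mutual information privacy measure concrete, here is a toy sketch that computes the leakage I(S; Z) exactly for a discrete case. The setup (a uniform private bit S released as Z after a random flip) and both function names are assumptions chosen for illustration; this is not the upper bound or the adversarial bound proposed in the project.

```python
import math

def mutual_information_bits(joint):
    """Mutual information (in bits) of a joint pmf given as a nested list."""
    total = sum(sum(row) for row in joint)
    p_row = [sum(row) / total for row in joint]
    p_col = [sum(col) / total for col in zip(*joint)]
    mi = 0.0
    for i, row in enumerate(joint):
        for j, mass in enumerate(row):
            p = mass / total
            if p > 0:  # zero-probability cells contribute nothing
                mi += p * math.log2(p / (p_row[i] * p_col[j]))
    return mi

def randomized_response_joint(flip_prob):
    """Joint pmf of (S, Z): S is a uniform private bit, Z is S flipped w.p. flip_prob."""
    keep = 1.0 - flip_prob
    return [[0.5 * keep, 0.5 * flip_prob],
            [0.5 * flip_prob, 0.5 * keep]]
```

With no flipping the received signal reveals the full bit, and with a flip probability of one half it reveals nothing; intermediate values trace out a simple privacy-utility curve.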

We also extend this principle to privacy-aware federated learning in wireless networks, where we train the local node models for the machine learning task at hand while preventing the local nodes’ private attributes from leaking to an adversary residing at the parameter server.

Communication Bottleneck in Distributed Training of Deep models

One of the main challenges in distributed training is the communication cost of transmitting the parameters or stochastic gradients (SGs) of the deep model for synchronization across processing nodes (a.k.a. workers or wireless edge nodes). Compression is a viable tool to mitigate the communication bottleneck. However, existing methods suffer from drawbacks such as increased variance of the SG, a slower convergence rate, or added bias in the SG. In our research, we address these challenges from three perspectives: (i) information theory and the CEO problem, (ii) compressing the SGs via low-rank matrix factorization and quantization, and (iii) compressive sampling.
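
As a rough illustration of perspective (ii), the sketch below compresses a gradient matrix with a truncated SVD and with an unbiased stochastic uniform quantizer. These are generic textbook building blocks with hypothetical function names, not the specific compression schemes developed in this project.

```python
import numpy as np

def low_rank_compress(grad, rank):
    """Keep the top-`rank` singular directions; returns two thin factors."""
    u, s, vt = np.linalg.svd(grad, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank]

def low_rank_decompress(left, right):
    """Rebuild the (approximate) gradient from its two factors."""
    return left @ right

def stochastic_quantize(x, num_levels, rng):
    """Round each entry to one of `num_levels` uniform levels between min and max.

    Rounding up happens with probability equal to the fractional part,
    so the quantized value equals x in expectation (no added bias).
    """
    lo, hi = x.min(), x.max()
    if hi == lo:
        return x.copy()
    step = (hi - lo) / (num_levels - 1)
    scaled = (x - lo) / step
    floor = np.floor(scaled)
    round_up = rng.random(x.shape) < (scaled - floor)
    levels = np.clip(floor + round_up, 0, num_levels - 1)
    return lo + levels * step
```

A worker would send the two factors (or the level indices plus `lo` and `step`) instead of the full matrix. The quantizer avoids bias at the price of extra variance, which is the kind of trade-off the paragraph above refers to.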

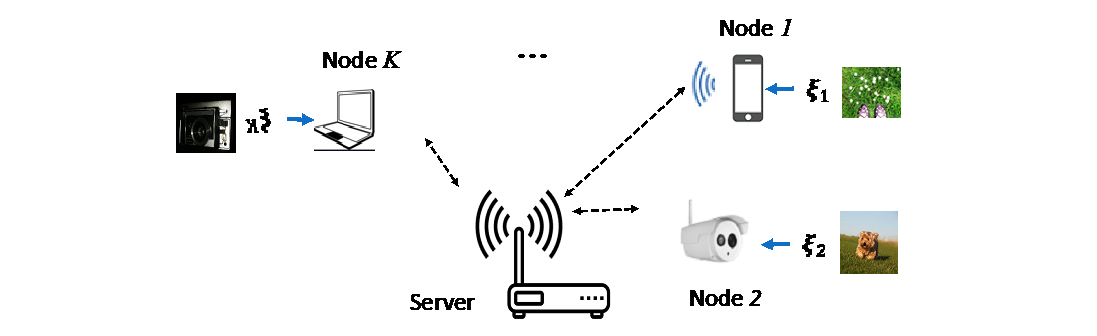

In a related project, we also consider federated learning over wireless multiple access channels (MAC). Efficient communication requires the compression algorithm to satisfy the constraints imposed by the nodes in the network, communication channel, and data privacy. To satisfy these constraints and take advantage of the over-the-air computation inherent in MAC, we propose a framework based on random linear coding and develop efficient power management and channel usage techniques to manage the trade-offs between power consumption, communication bit-rate, and convergence rate of federated learning.
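
The sketch below illustrates why random linear coding pairs naturally with over-the-air computation: because the encoding is linear, the superposition of the transmitted signals is the encoding of the summed gradients. The shared projection matrix, the common power scaling rule, and the function name are assumptions made for illustration; recovering the sum from fewer measurements than dimensions would additionally need a sparse recovery step that is not shown.

```python
import numpy as np

def over_the_air_sum(gradients, proj, power_budget, noise_std, rng):
    """Simulate simultaneous transmission of linearly coded gradients over a MAC.

    Every node applies the same projection `proj` and the same scaling, chosen
    so that the most energetic node exactly meets `power_budget`. The channel
    adds the signals and Gaussian noise; the receiver undoes the scaling.
    """
    encoded = [proj @ g for g in gradients]
    max_energy = max(float(e @ e) for e in encoded)
    if max_energy == 0.0:
        return np.zeros(proj.shape[0])
    scale = np.sqrt(power_budget / max_energy)
    superposed = sum(scale * e for e in encoded)  # the channel performs the sum
    noise = noise_std * rng.standard_normal(superposed.shape)
    return (superposed + noise) / scale
```

Note that the effective noise seen by the receiver is `noise_std / scale`, so a larger power budget or smaller encoded energy directly improves the quality of the aggregated update.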

RePurpose: Communication Bottleneck in Collaborative Inference

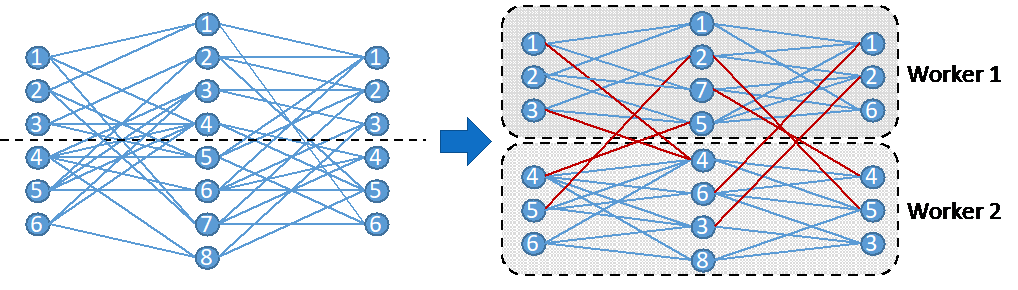

In this project, we develop a framework for deep model restructuring and adjustment for distributed/collaborative inference in wireless networks. While the complexity of modern deep neural networks allows them to learn complicated tasks, their computational complexity and memory footprint limit their use in many real-time applications as well as their deployment on wireless edge networks whose edge nodes have limited resources. Moreover, in some real-world scenarios, such as sensor networks, inference is performed on data observed by the entire network. However, transferring all data to a powerful central node to perform the ML task is undesirable due to the sheer amount of data to be collected, limited computational power, and privacy concerns. Hence, it is preferable to develop a distributed equivalent of a trained deep model for deployment over the sensor network. In this project, we consider the distributed parallel implementation of an already-trained deep model on multiple wireless nodes at the edge. The deep model is divided into several parallel sub-models, each of which is executed by a wireless node. Since latency due to synchronization and data transfer among wireless nodes negatively impacts the performance of the parallel implementation, it is desirable to have minimal interdependency and communication among the wireless nodes that execute the parallel sub-models. To achieve this goal, we develop and analyze RePurpose, an efficient algorithm that rearranges the neurons in the neural network and partitions them (without changing the general topology of the network) such that the interdependency among sub-models is minimized under the computation and communication constraints of the wireless nodes.
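
To make the notion of interdependency concrete, the following sketch counts how many activations must cross between nodes for a single layer under a given assignment of neurons to nodes. The cost definition and the function name are illustrative assumptions; this is a way to score a partition, not the RePurpose algorithm itself.

```python
def cross_node_transfers(weights, in_owner, out_owner):
    """Count activations that must be sent between nodes for one layer.

    weights[j][i] != 0 means output neuron j reads input neuron i.
    in_owner[i] / out_owner[j] give the node that hosts each neuron.
    An input activation is sent at most once to each remote node that needs it.
    """
    transfers = 0
    for i, src in enumerate(in_owner):
        consumers = {out_owner[j] for j, row in enumerate(weights) if row[i] != 0}
        consumers.discard(src)  # local reads cost nothing
        transfers += len(consumers)
    return transfers

# A 4x4 layer whose weights form two independent 2x2 blocks.
block_layer = [[1, 1, 0, 0],
               [1, 1, 0, 0],
               [0, 0, 1, 1],
               [0, 0, 1, 1]]
aligned = cross_node_transfers(block_layer, [0, 0, 1, 1], [0, 0, 1, 1])
interleaved = cross_node_transfers(block_layer, [0, 1, 0, 1], [0, 0, 1, 1])
```

Here the assignment that follows the block structure needs no communication, while the interleaved one does, which is the kind of difference a neuron rearrangement aims to exploit.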

Machine learning for Joint Source-Channel Coding (JSCC) over AWGN channels

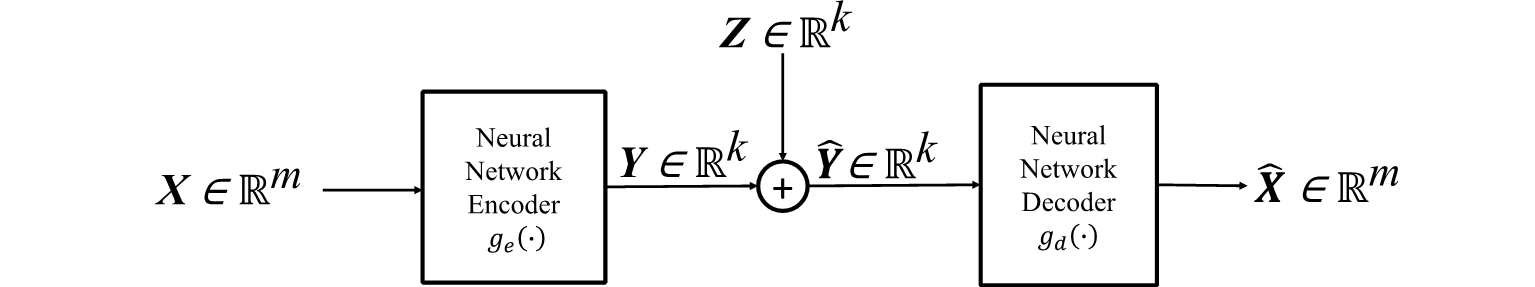

In this research, we seek to design deep-learning-based encoders and decoders for communication and collaborative inference over wireless channels by using information-theoretic insights. In particular, we consider the problem of Joint Source-Channel Coding (JSCC) over AWGN channels. Here, a transmitter observes a signal and processes the observation for transmission over an AWGN channel. The receiver, in turn, attempts to reconstruct the observation. We show that this encoder-decoder setup is similar to a Variational Autoencoder (VAE), and that the resulting minimization objective is an upper bound on the one from Shannon’s rate-distortion problem. We also advocate the need for discontinuous encoding maps in Gaussian JSCC and propose a mixture-of-encoders system to facilitate the learning of the encoders and the decoder. Our experimental results show that the proposed scheme outperforms existing methods for JSCC of Gaussian sources, Laplacian sources, and images.
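
As a point of reference for the setup above, the sketch below simulates the simplest JSCC scheme for a scalar Gaussian source with one channel use per source sample: a power-normalized linear encoder, an AWGN channel, and a linear MMSE decoder. In this matched-bandwidth case, linear transmission is known to attain the Shannon-optimal distortion σ²/(1 + P/N), so it is a convenient sanity check. The function names and parameters are illustrative and this is not the learned mixture-of-encoders scheme.

```python
import math
import random

def optimal_distortion(source_var, power, noise_var):
    """Shannon-optimal MSE for a Gaussian source over AWGN, one channel use per sample."""
    return source_var / (1.0 + power / noise_var)

def simulate_linear_jscc(source_var, power, noise_var, num_samples, seed=0):
    """Empirical MSE of linear encoding followed by linear MMSE decoding."""
    rng = random.Random(seed)
    gain = math.sqrt(power / source_var)  # encoder meets the power constraint
    decoder = math.sqrt(power * source_var) / (power + noise_var)  # LMMSE weight
    sq_err = 0.0
    for _ in range(num_samples):
        s = rng.gauss(0.0, math.sqrt(source_var))            # source sample
        y = gain * s + rng.gauss(0.0, math.sqrt(noise_var))  # AWGN channel output
        sq_err += (s - decoder * y) ** 2
    return sq_err / num_samples
```

When the source and channel bandwidths do not match, linear maps are generally no longer optimal, which is one setting where nonlinear learned encoders become relevant.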

Next, we consider the problem of distributed JSCC over a Gaussian Multiple Access Channel (GMAC). In this problem, multiple nodes observe correlated signals and encode them independently for transmission over the GMAC. Building upon the VAE insights proposed earlier, we propose the first JSCC of distributed Gaussian sources learned using optimization that does not require complete knowledge of the joint distribution of sources and achieves state-of-the-art performance. We also propose two novel upper bounds on the optimal distortion for JSCC of distributed Gaussian sources over a GMAC, one of which is a generalization of an existing bound. We show that the new bound is empirically tighter than the generalized bound.